(1) A robot may not injure a human being or, through inaction, allow a human being to come to harm.

(2) A robot must obey orders given to it by human beings, except where such orders would conflict with the First Law.

(3) A robot must protect its own existence as long as such protection does not conflict with the First or Second Law.

Asimov, Three Laws of Robotics (I, Robot)

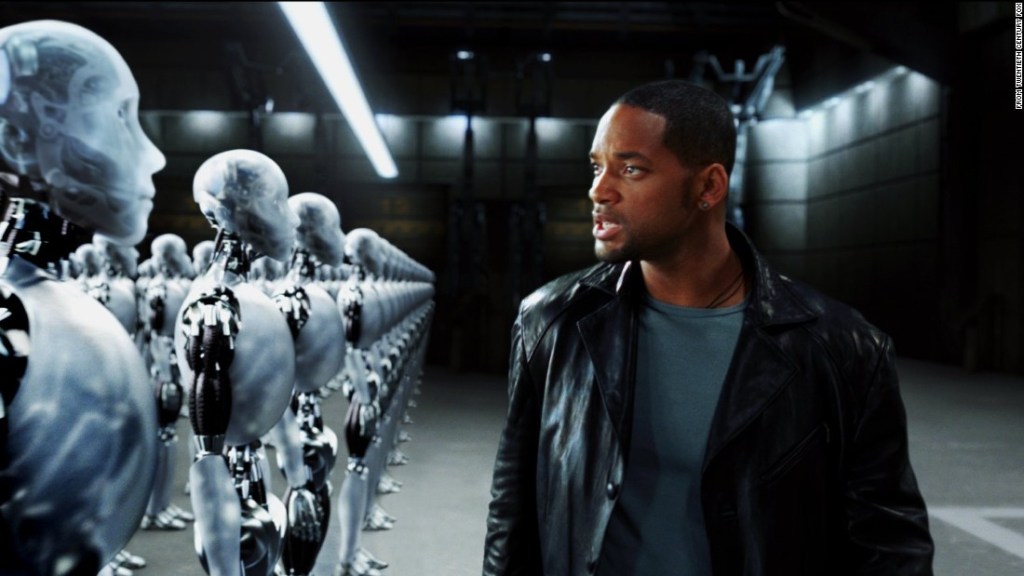

Memories of watching Will Smith in the movie I, Robot flooded my mind as I read this article from MIT: “What Buddhism can do for AI ethics.” In the film, one of the robots, Sonny, is accused of killing his creator, Lanning. The accusation baffles the investigators, because the scientists had embedded the Three Laws of Robotics in every robot.

Members of the Institute of Electrical and Electronics Engineers (IEEE) write guidelines for how artificial intelligence (AI) should be developed.

Soraj Hongladarom, a professor of philosophy at the Center for Science, Technology, and Society at Chulalongkorn University in Bangkok, Thailand, explores the unequal representation among those who set the ethics governing AI development. The guidelines were created predominantly from Western values; for AI to be more universal, Eastern values need to be included.

❤


🤖😊 thanks!


Fascinating, especially since I sit firmly between “Western” and Buddhist cultures. But Hongladarom’s perspective seems open to many of the same problems that Isaac Asimov explored in his “I, Robot” stories, if by a different route. For instance, Hongladarom states, “…ethical use of AI must strive to decrease pain and suffering.” As with Asimov’s First Law, a utilitarian interpretation of this could justify anything from restrictions on “risky” behaviors to organ harvesting to preemptive warfare. And what defines “pain” or “suffering”? Might an AI interpret this as requiring lobotomy, euthanasia, or population control?

Just getting an AI to approximate the incredibly complex ethical evaluations that humans base on assumptions of free will and a shared subjective experience is going to be difficult. Achieving spiritual perfection may be an even taller order.


I’m between two worlds as well. You brought up some intriguing points, and they are somewhat frightening to think about. I am currently reading “The Power of Ethics: How to Make Good Choices in a Complicated World” by Susan Liautaud. The scenarios she has raised so far are complex, and I can’t imagine how an AI would process them. I hope to share my reflections on her book once I finish reading it.
